Oliver Mitchell discusses machine sensing at the RobotLab forum on cybersecurity and machines.

Escaping the arctic weather, I ducked into a cab only to confront something worse than freezing rain: body odor. Luckily, another taxi pulled to the curb, and I continued my journey to last Tuesday’s RobotLab forum on Cybersecurity & Machines. Moderating a panel at the new Global Cyber Center with John Frankel of ffVC and Guy Franklin of SOSA, I noticed my temperature rising as the discussion turned to malicious artificial intelligence turning autonomous vehicles and humanoids on their organic masters. While the hyperbole smacked of science fiction, the premise of nefarious actors hacking into nuclear reactors, urban infrastructure, and industrial complexes is very real. As important as building firewalls is keeping the humans who maintain them alert and vigilant to potential threats.

When discussing sensors in unmanned systems, one usually thinks first of vision, then of force and acoustic sensing. In the animal kingdom, however, the olfactory system reigns supreme. Alexandra Horowitz of Barnard College has extensively studied the heroic feats of canine noses and proudly points to their “incredible detection abilities evidenced every day by search-and-rescue, narcotics- and explosives-detection dogs, as well as everything from bedbug detection dogs to dogs who can find the scat of orcas in Puget Sound.”

In a recent Washington Post article, Professor John McGann of Rutgers University, a champion of the underappreciated human sense of smell, claimed that “humans are about as sensitive as dogs at detecting amyl acetate, a chemical with a banana odor. We’re better than mice at detecting a smelly compound in human blood. And one widely cited 2014 estimate, though later critiqued, suggested that humans can detect 1 trillion different scents.”

Having experienced the smells of New York City firsthand, I believe machine sensing of odors could be a very powerful tool for computers to protect people from harm, from stopping psychopaths and distracted drivers to detecting terminal disease.

Machine sensing and machine learning

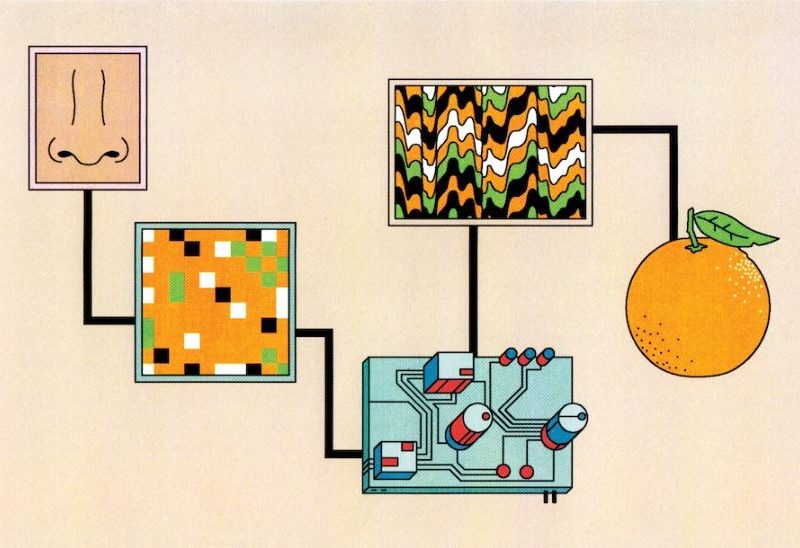

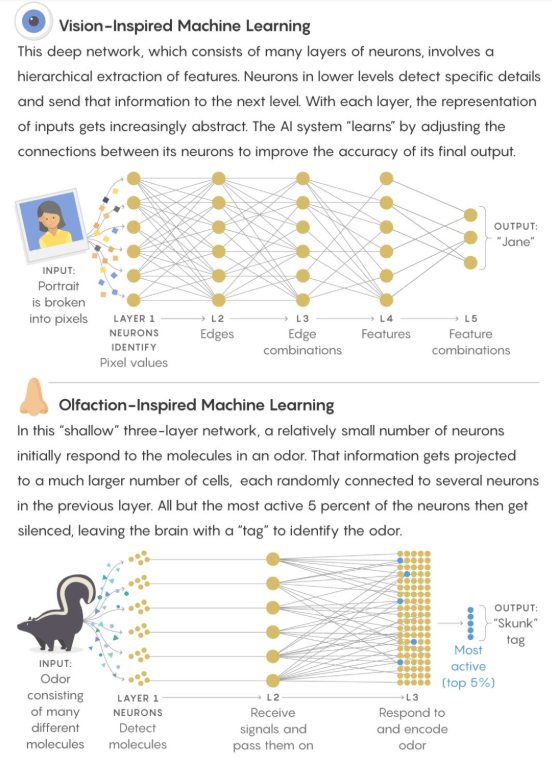

To date, the challenge with virtual olfaction has been embedding such techniques within a sensing network. Most deep learning systems are modeled on the hierarchical processing of the brain’s visual cortex; olfactory neurons, by contrast, are wired largely at random.

To prioritize the data within a computer model, scientists are mapping how fruit flies process odors. The olfactory circuit of a typical fruit fly, Drosophila melanogaster, relays signals from about 50 neurons to more than 2,000 Kenyon cells, which decipher scents.

Saket Navlakha, a computer scientist at the Salk Institute, explained the advantage of this machine sensing expansion: “Let’s say you have 1,000 people, and you stuff them into a room and try to organize them by hobby. Sure, in this crowded space, you might be able to find some way to structure these people into their groups. But now, say you spread them out on a football field. You have all this extra space to play around with and structure your data.”

It is this ability of the fly to expand the data that Navlakha is harnessing in his machine learning algorithms. He cites the fly’s efficiency in silencing 95 percent of the noise and tagging only the remaining Kenyon cells as critical to deploying commercial-ready olfactory neural networks.
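The expand-then-sparsify idea can be sketched in a few lines of Python. This is a minimal illustration, not Navlakha’s published code: the sizes (50 inputs, 2,000 Kenyon cells, 6 random inputs per cell, top 5 percent kept), the mean-centering step, the synthetic odors, and every function name are assumptions chosen to echo the fly circuit described above.

```python
import random

# Illustrative sizes (assumptions, chosen to echo the fly circuit described above)
N_INPUTS = 50         # input neurons carrying the odor signal
N_KENYON = 2000       # Kenyon cells the signal is expanded into
INPUTS_PER_CELL = 6   # each Kenyon cell samples a few random inputs
KEEP_FRACTION = 0.05  # winner-take-all keeps the top 5 percent


def make_projection(seed=0):
    """Build a sparse, random wiring: each Kenyon cell reads a few random inputs."""
    rng = random.Random(seed)
    return [rng.sample(range(N_INPUTS), INPUTS_PER_CELL) for _ in range(N_KENYON)]


def fly_hash(odor, projection):
    """Expand a 50-value odor vector into 2,000 cells, then tag only the most active."""
    # Center the input so the tag reflects the odor's pattern rather than its strength.
    mean = sum(odor) / len(odor)
    centered = [x - mean for x in odor]
    # Expansion: each Kenyon cell sums the inputs it is randomly wired to.
    activity = [sum(centered[i] for i in cell) for cell in projection]
    # Winner-take-all: keep the top 5 percent, silence the other 95 percent.
    k = max(1, round(N_KENYON * KEEP_FRACTION))
    winners = sorted(range(N_KENYON), key=lambda j: activity[j], reverse=True)[:k]
    return frozenset(winners)


def similarity(tag_a, tag_b):
    """Jaccard overlap between two tags: 1.0 means identical sets of Kenyon cells."""
    return len(tag_a & tag_b) / len(tag_a | tag_b)


# Usage: a synthetic "banana" odor, a slightly noisy copy, and an unrelated odor.
projection = make_projection(seed=42)
rng = random.Random(1)
banana = [rng.random() for _ in range(N_INPUTS)]
noisy_banana = [x + rng.gauss(0, 0.05) for x in banana]
smoke = [rng.random() for _ in range(N_INPUTS)]

tag_banana = fly_hash(banana, projection)
print("banana vs noisy banana:", similarity(tag_banana, fly_hash(noisy_banana, projection)))
print("banana vs smoke:       ", similarity(tag_banana, fly_hash(smoke, projection)))
```

The design mirrors the football-field analogy: spreading 50 numbers across 2,000 cells gives similar odors room to land on overlapping tags, while unrelated odors barely overlap, and keeping only 100 active cells makes each tag cheap to store and compare.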

To better understand potential olfactory applications for self-driving cars, I caught up with Dr. Yigal Sharon of Moodify. The Israeli startup was founded by Dr. Sharon, a psychotherapist and world-renowned researcher of people’s emotional connections with fragrances. The scientist and his team of neuroscientists and engineers are currently beta-testing the first-ever “empathetic car,” complete with an array of vision-based sensors and fragrance emitters.

Moodify’s founder described to me how its machine sensing system actively monitors and adjusts cabin comfort based on driver and passenger conditions. “Since the explicit perception of smell is very much subjective, different smells can evoke different sensations for different people. Using computer vision, we detect changes in facial features that are caused uniquely by (subjectively perceived) aversive odors. Using AI, we can create a smart diffuser that will be triggered only when needed and by using continuous feedback we can adjust the volume of fragrance used to create optimal experience,” boasted the entrepreneur.
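The loop Dr. Sharon describes (detect aversion, trigger the diffuser only when needed, then adjust volume from continuous feedback) can be sketched as a small controller. This is a hypothetical illustration, not Moodify’s implementation: it assumes a vision model (not shown) that emits an aversion score between 0 and 1, and the class name, thresholds, and gain are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class DiffuserController:
    """Hypothetical fragrance feedback loop; every threshold and gain is an assumption."""
    trigger_threshold: float = 0.6  # aversion score that arms the diffuser
    target_score: float = 0.2       # aversion level the loop tries to settle at
    gain: float = 0.5               # how far intensity moves per unit of error
    max_intensity: float = 1.0      # diffuser's full output
    intensity: float = 0.0          # current fragrance volume, 0.0 means off
    active: bool = False

    def update(self, aversion_score: float) -> float:
        """Consume one aversion score (0..1) from a vision model; return the new intensity."""
        if not self.active:
            if aversion_score < self.trigger_threshold:
                return 0.0  # stay off unless the occupant visibly reacts
            self.active = True
        # Continuous feedback: raise volume while aversion stays above target, lower it after.
        error = aversion_score - self.target_score
        self.intensity = min(self.max_intensity, max(0.0, self.intensity + self.gain * error))
        if self.intensity == 0.0:
            self.active = False  # fully backed off, so disarm until the next trigger
        return self.intensity


# Usage: simulated scores from a (hypothetical) facial-expression model.
controller = DiffuserController()
for score in [0.1, 0.7, 0.6, 0.4, 0.2, 0.1, 0.0, 0.0]:
    print(f"aversion={score:.2f} -> intensity={controller.update(score):.2f}")
```

An integral-style update keeps the sketch short; a real cabin system would presumably also need to smooth noisy vision scores and limit how quickly the fragrance ramps up.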

The first market for their empathetic car is fatigued driving. Similar to smelling salts, Moodify’s device actively wakes drowsy drivers with foul odors to head off catastrophic accidents. The National Highway Traffic Safety Administration estimates that close to 20 percent of all fatal crashes are the result of exhaustion. This statistic is even more alarming with professional drivers, as 85 percent of commercial drivers have been diagnosed with obstructive sleep apnea, according to The American Transportation Research Institute.

While most solutions on the market use the radio or fan to startle inattentive motorists, smell is more immediate. Dr. Sharon proclaimed, “The human nose is the only sensory organ that transmits incoming signals and stimuli unfiltered to the limbic system. The reaction time of the limbic system is a few milliseconds. Even before the awareness of the fragrances is activated, processes are triggered that make people feel emotions after a very short time.”

The psychotherapist expounded: “Fragrances have an immense effect on our feelings and behavior both in an explicit and an implicit manner. The interesting thing about smell is that it affects us, not only in how we make decisions, but it also has a biological effect that most of the time we are not aware of. This is a new field that offers amazing opportunities.”

Dr. Sharon recognizes that the road to mass implementation of vehicle olfaction is currently slow going. “Nowadays, most automotive companies concentrate on the core problems: connectivity, autonomous driving, speech intelligibility and of course batteries,” he said. “These activities are engineer-driven and dominated.”

He joked that “engineers don’t think very much about smell in cars except in France and in the marketing divisions!” Undeterred, the Israeli innovator remains optimistic about machine sensing. “For some years now, we have been exposed to the influence of smell on purchasing decisions and well-being,” he said.

Riding in a cab home from RobotLab, with Dr. Sharon’s pitch ringing in my ears, I recalled Chris Farley’s punchline: “In the land of the skunks, he who has half a nose is king.”

A Chevy Bolt concept vehicle with no steering wheel.

Source: Machine sensing, smell to improve the self-driving experience