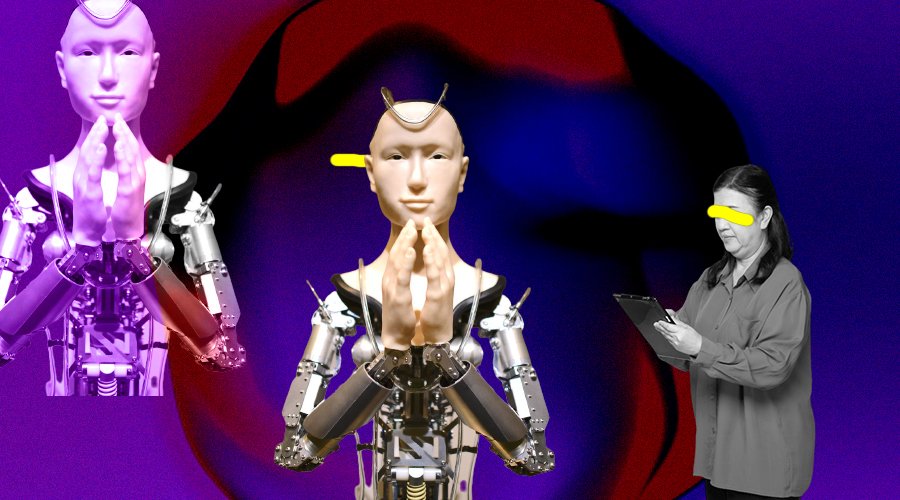

Thanks to continuous advances in AI technology and research, robots are now gaining a unique sixth sense.

What is sixth sense technology?

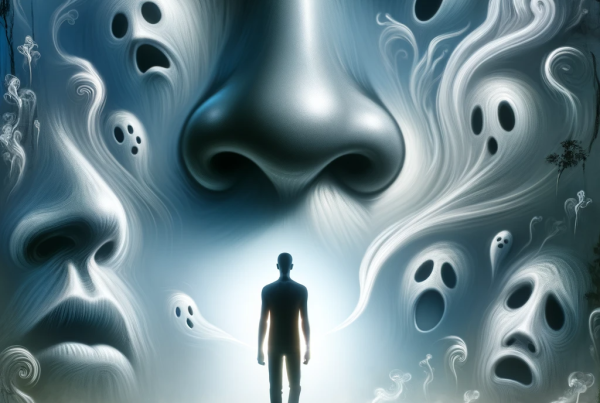

Some experts believe that humans are born with a sixth sense. It’s the sense of proprioception, which is the perception or awareness of one’s own body’s position and movement. This sensation aids in the coordination of our motions.

Solid-state sensors, which have traditionally been used in robotics, cannot capture the high-dimensional deformations of soft systems, which makes this sophisticated sense difficult to replicate in robots. Embedded soft resistive sensors, on the other hand, have the potential to solve this problem. With rapid advances in AI research and new methods that combine a variety of sensory materials with machine-learning algorithms, scientists are getting closer to overcoming the difficulties of this approach.

Integrating sixth sense technology into a robot generally involves several pieces of software. Sixth sense technology is a take on the idea of augmented reality: it recognises the objects in our environment and presents information about them in real time. The user can interact with that content through hand gestures. Compared with text- and graphics-based user interfaces, this is a much more efficient method.

After the robot is built and the sensors are installed, the next step is to integrate digital information into the physical world by programming the robot to take image recognition inputs, which turns it into a sixth sense robot. Python was used in conjunction with code from the Arduino IDE to complete this task.
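As a rough illustration of how the Python side might hand an image recognition result to the Arduino side, here is a minimal sketch. Everything in it is an assumption made for illustration: the gesture labels, the one-byte commands, and the `send_command` helper are hypothetical, and an in-memory buffer stands in for the serial port so the example runs without hardware.

```python
import io

# Hypothetical mapping from recognised gesture labels to one-byte commands.
# Neither the labels nor the command bytes come from the original project.
COMMANDS = {
    "open_palm": b"F",    # move forward
    "fist": b"S",         # stop
    "point_left": b"L",   # turn left
    "point_right": b"R",  # turn right
}


def send_command(port, label):
    """Write the command for a recognised label; return the byte sent, or None."""
    command = COMMANDS.get(label)
    if command is None:
        return None  # unknown label: send nothing rather than guess
    port.write(command + b"\n")  # newline marks the end of one command
    return command


# An in-memory buffer stands in for the serial port in this sketch.
port = io.BytesIO()
for label in ["open_palm", "unknown", "point_left", "fist"]:
    send_command(port, label)

sent = port.getvalue()
print(sent)
```

On real hardware, `port` would be whatever serial connection object the project uses, provided it has a `write` method that accepts bytes, and the Arduino sketch would read one command per line.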

How does a Sixth Sense Robot work?

When it comes to smell and taste, robots with chemical sensors could be far more precise than humans. Building in proprioception, the robot’s awareness of itself and its body, is far more challenging, and it is a big reason why humanoid robots are so tough to get right.

Tiny adjustments in movement can make a big difference in human-robot interaction, wearable robotics, and sensitive applications like surgery.

In hard robotics, proprioception is usually handled by putting a number of strain and pressure sensors in each joint, which allows the robot to work out where its limbs are. This is fine for rigid robots with a limited number of joints, but it is insufficient for softer, more flexible robots.
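To see why joint sensors are enough for a rigid robot, consider a minimal sketch of forward kinematics for a planar arm. It assumes each joint sensor reports an angle relative to the previous link; the link lengths, the angles, and the `forward_kinematics` name are illustrative only.

```python
import math


def forward_kinematics(link_lengths, joint_angles):
    """Return the (x, y) position of the base, each joint, and the tip of a planar arm."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle  # each angle is measured relative to the previous link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


# Hypothetical two-link arm: both links are 1.0 long,
# and the joint sensors read +90 and -90 degrees.
points = forward_kinematics([1.0, 1.0], [math.pi / 2, -math.pi / 2])
tip = points[-1]
print(tip)
```

With rigid links, a handful of joint readings pins down the whole pose. A soft body has no such fixed links, so the same calculation has nothing to work from.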

Roboticists face a trade-off between a large, complicated array of sensors covering every degree of freedom in a robot’s movement and limited proprioception. New solutions are addressing this challenge, often by combining new arrays of sensory material with machine-learning algorithms that fill in the gaps.

A recent study in Science Robotics describes the use of soft sensors distributed at random through a robotic finger. Rather than relying on data from a limited number of positions, this placement resembles the constant adaptation of sensors in humans and animals.

The sensors enable the soft robot to respond to touch and pressure in a variety of locations, creating a map of itself as it contorts into difficult poses. A motion-capture system observes the finger as it moves around, and a machine-learning algorithm interprets the signals from the randomly scattered sensors. Once the robot’s neural network has been trained, it can link sensor feedback to the finger position detected by the motion-capture system, and the motion-capture system can then be discarded. The robot watches its own movements to figure out what shapes its soft body can take and then translates those shapes into the language of these soft sensors.
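The training idea can be sketched in a few lines of Python with NumPy. This is a toy simulation under stated assumptions, not the method from the study: the finger is modelled as two bending segments, the sensor response is an invented `tanh` curve with noise, and a plain least-squares fit stands in for the neural network. All function and variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_SENSORS = 12  # assumed sensor count, chosen for illustration

# Random "placement": how strongly each sensor responds to each bend angle.
sensitivity = rng.uniform(0.2, 1.0, size=(N_SENSORS, 2))


def tip_position(angles):
    """Stand-in for motion capture: fingertip (x, y) of a two-segment finger."""
    a, b = angles[:, 0], angles[:, 1]
    return np.stack([np.cos(a) + np.cos(a + b), np.sin(a) + np.sin(a + b)], axis=1)


def sensor_readings(angles):
    """Simulated non-linear, noisy readings from the scattered sensors."""
    clean = np.tanh(angles @ sensitivity.T)
    return clean + rng.normal(0.0, 0.01, size=clean.shape)


def with_bias(readings):
    """Append a constant column so the fit can learn an offset."""
    return np.hstack([readings, np.ones((readings.shape[0], 1))])


# Training phase: sensor signals are paired with motion-capture positions.
train_angles = rng.uniform(0.0, np.pi / 2, size=(2000, 2))
train_x = with_bias(sensor_readings(train_angles))
train_y = tip_position(train_angles)
weights, *_ = np.linalg.lstsq(train_x, train_y, rcond=None)

# Deployment phase: motion capture is gone; position is predicted from sensors alone.
test_angles = rng.uniform(0.0, np.pi / 2, size=(500, 2))
predicted = with_bias(sensor_readings(test_angles)) @ weights
actual = tip_position(test_angles)  # used here only to score the prediction

model_error = float(np.mean(np.linalg.norm(predicted - actual, axis=1)))
baseline_error = float(np.mean(np.linalg.norm(train_y.mean(axis=0) - actual, axis=1)))
print(f"mean tip error from sensors: {model_error:.3f}")
print(f"mean tip error when always guessing the average: {baseline_error:.3f}")
```

The fit never needs to know where each sensor sits. It only needs enough paired examples to relate the pattern of readings to the observed position, which is why random placement is workable.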

The benefits of this approach include the ability to predict the complex motions and forces experienced by the soft robot (which is impossible with traditional methods) and the fact that it can be applied to a variety of actuators and sensors.

The application of machine learning allows roboticists to create a reliable model for this complicated, non-linear system of actuator motions, which is difficult to achieve by just calculating the soft bot’s expected motion. It also mirrors the human proprioception system, which is based on redundant sensors that shift in position as we age.

Machine learning techniques are revolutionising robotics in ways that have never been seen before. Combining these with our knowledge of how humans and other animals perceive and interact with the world around them is pushing robotics closer to being truly flexible and adaptable, and eventually ubiquitous.

Source: Robots Developing the Unique Sixth Sense, Thanks to Advanced Research