Researchers at the National University of Singapore recently demonstrated the advantages of using neuromorphic sensor fusion to help robots grip and identify objects.

It’s just one of a number of interesting projects they’ve been working on, including developing a new protocol for transmitting tactile data, building a neuromorphic tactile fingertip, and creating new visual-tactile datasets for the development of better learning systems.

Because the technology uses address-events and spiking neural networks, it is extremely power efficient: running on one of Intel’s Loihi neuromorphic chips, it is 50 times more power efficient than on a GPU. However, what’s particularly elegant about this work is that it points the way towards neuromorphic technology as a means of efficiently integrating, and extracting meaning from, many different sensors for complex tasks in power-constrained systems.

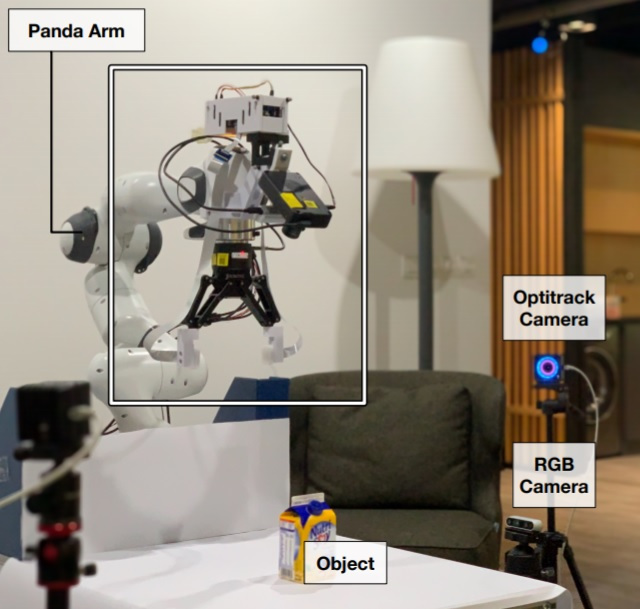

The new tactile sensor they used, NeuTouch, consists of an array of 39 taxels (tactile pixels), with movement transduced using a graphene-based piezo-resistive layer; you can think of this as the front of the robot’s fingertip. It’s covered with an artificial skin, called Ecoflex, that helps to amplify the stimuli, and is supported by a 3D-printed “bone”. The fingertips can then be added to grippers.

But what makes these sensors novel is the way they communicate their information. This is not done serially, with each sensor reporting its state in turn in a given time step, because that is too slow. Nor are the sensor arrays laid out as a mesh: although that is fine for many electronic systems, it is too risky for the robust robot skin they are trying to create, because damage to the skin could take out too many sensors.

Instead, Benjamin Tee and his colleagues developed the Asynchronously Coded Electronic Skin, or ACES.

This uses a single conductor to carry a signal from (potentially) many tens of thousands of receptors. The technique has some similarities with address-event representation (AER) in that the sensor does use events. Essentially, each sensor emits a positive or negative spike if the pressure on it changes beyond a certain threshold. As with AER, these spikes are sent asynchronously, based only on what is happening in the real world; there is no clock to regulate them. However, rather than point-to-point routing, it’s a many-to-one network. Each sensor’s “spike” is actually a unique code (a series of spikes) and, because the spikes are both asynchronous and relatively sparse, they can travel down a single wire and be decorrelated into individual spike trains later.
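To make the idea concrete, here is a minimal sketch of the scheme as described above, not the ACES implementation itself. The taxel names, the signature codes (sets of pulse offsets in discrete time slots), the threshold value and the use of discrete slots at all are assumptions made purely for illustration. Each hypothetical taxel turns a pressure trace into positive or negative events, stamps its own code onto a shared wire, and a receiver recovers the per-taxel events by correlating the wire against each code.

```python
# Hypothetical signatures: each taxel gets a unique set of pulse offsets (time slots).
SIGNATURES = {
    "taxel_a": (0, 2, 7),
    "taxel_b": (0, 3, 5),
    "taxel_c": (0, 1, 6),
}


def threshold_events(samples, threshold):
    """Return (slot, polarity) events whenever the signal has moved at least
    `threshold` away from the level at which the last event was reported."""
    events = []
    reference = samples[0]
    for slot, value in enumerate(samples[1:], start=1):
        delta = value - reference
        if abs(delta) >= threshold:
            events.append((slot, 1 if delta > 0 else -1))
            reference = value
    return events


def transmit(events_by_taxel, length):
    """Superimpose every taxel's signed signature pulses on one shared wire."""
    wire = [0] * length
    for taxel, events in events_by_taxel.items():
        for start, polarity in events:
            for offset in SIGNATURES[taxel]:
                wire[start + offset] += polarity
    return wire


def decode(wire):
    """Correlate the wire with each signature; a full match marks an event."""
    decoded = {taxel: [] for taxel in SIGNATURES}
    for taxel, offsets in SIGNATURES.items():
        span = max(offsets)
        for start in range(len(wire) - span):
            score = sum(wire[start + offset] for offset in offsets)
            if abs(score) >= len(offsets):
                decoded[taxel].append((start, 1 if score > 0 else -1))
    return decoded


# Made-up pressure traces: taxel_a is pressed then released, taxel_b is pressed
# later, taxel_c is never touched.
traces = {
    "taxel_a": [0.0] * 3 + [1.0] * 12 + [0.0] * 5,
    "taxel_b": [0.0] * 9 + [1.0] * 11,
    "taxel_c": [0.0] * 20,
}
sent = {taxel: threshold_events(trace, threshold=0.5) for taxel, trace in traces.items()}
wire = transmit(sent, length=28)
received = decode(wire)
print(received)
```

In this toy case the receiver recovers exactly the events that were sent, because the events are sparse enough that the codes rarely collide. With denser activity, pulses from different taxels would start to overlap and a simple full-match correlator like this one would begin to miss or misattribute events, which is why sparseness matters to the scheme.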

Mixed feelings

In the experiment they published last year, the group were able to combine data from both pressure and temperature sensors within the tactile array, but since then they’ve been able to show more sophisticated sensor fusion. Specifically, Harold Soh’s group have combined the NeuTouch fingertips with a Prophesee event-based vision sensor and used the data generated (both separately and together) to train a spike-based network that approximates backpropagation [2].

After being trained to pick up various containers with different amounts of liquid (such as cans, bottles, and the soy milk shown in Figure 1), the robot was able to determine not only what it was lifting but also, to within 30g, how much it weighed.

You can see a nice (and very Covid-safe!) video of this work below.

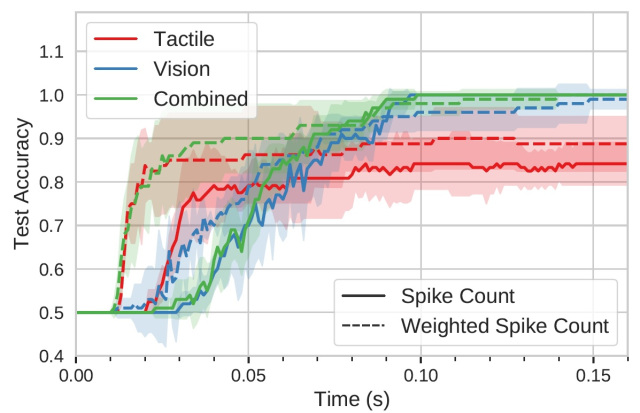

The vision sensor data was able to classify objects more successfully on its own than the researchers were expecting: the weight of the objects was visible because of the containers’ transparency and the way they deformed. Nevertheless, the tactile data did improve the accuracy.

Perhaps more importantly, however, the combination of the two sensors gave the robot a real advantage when doing so-called slip tests. This is where the robot is made to grip with an amount of pressure that is not quite enough to take the object’s weight securely, so the object has a tendency to slip down. Here, the use of both tactile and visual data seems to really help in identifying a slip quickly (see Figure 2).

This is partly thanks to a quantity called weighted spike count that they use in their models to encourage early classification. Functionally this could improve a machine’s reaction time, giving it a better chance of minimizing the likelihood and consequences of dropping an object.
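As a rough illustration of why weighting spikes by time can favour early decisions, the sketch below scores each output neuron by a weighted spike count in which earlier spikes count for more. The linearly decreasing weights, the start and end weight values, the class labels and the spike trains are all assumptions for the example; the article does not give the form the researchers use, and this is not their model or training code.

```python
def weighted_spike_count(spike_train, start_weight=1.0, end_weight=0.1):
    """Sum a 0/1 spike train using weights that fall linearly from
    `start_weight` at the first time step to `end_weight` at the last."""
    steps = len(spike_train)
    total = 0.0
    for t, spike in enumerate(spike_train):
        fraction = t / (steps - 1) if steps > 1 else 0.0
        weight = start_weight + (end_weight - start_weight) * fraction
        total += weight * spike
    return total


def classify(output_spikes):
    """Pick the label whose output neuron has the largest weighted count."""
    scores = {label: weighted_spike_count(train) for label, train in output_spikes.items()}
    return max(scores, key=scores.get)


# Made-up output spike trains: both neurons fire four times in total,
# but the "slip" neuron fires early and the "stable" neuron fires late.
outputs = {
    "slip": [1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
    "stable": [0, 0, 0, 0, 0, 0, 1, 1, 1, 1],
}
print(classify(outputs))
```

A plain spike count would call this a tie, whereas the weighted version prefers the neuron that committed early. Used as a training objective rather than a readout, the same weighting would reward a network for producing its evidence sooner.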

The group have made their datasets available to other researchers who might want to work on improving the learning models used.

Power benefits

For these experiments, training was done using conventional technology, but the network was then run on the Intel Loihi chip. The published results show a 50x improvement in power efficiency, but that’s already been improved upon.

According to NUS’s Harold Soh, since the paper came out, “…We’ve been fine-tuning our neural models and analyses. Our most recent slip detection model uses 1900x less power when run on neuromorphic hardware compared to a GPU, while retaining inference speed and accuracy. Our focus now is on translating this low-level performance to better robot behaviors on higher-level tasks, such as object pick-and-place and human-robot handovers. More broadly, we believe event-driven multi-sensory intelligence to be an important step towards trustworthy robots that we feel comfortable working with.”

Source: Neuromorphic Sensor Fusion Grants Robots Precise Sense of Touch – EE Times Asia