I’m sitting in a spacious room with the lights dimmed, surrounded by screens and speakers, and awaiting an immersive experience to awaken my senses. And not just the obvious ones.

I’m told this is a one-of-a-kind research space, equipped with nearly 100 line-array speakers, that can create a spherical sound experience moving 360 degrees in any direction.

I’m asked to put two fingers of my right hand into a Styrofoam contraption made especially for them. My fingers rest on top of plates inside the device. I hear the sounds of a forest.

“If you take a moment and notice how the sound travels, you can get an idea of what we can do with sounds in space,” says Iddo Wald, chief design & technology officer at the Baruch Ivcher Institute for Brain, Cognition & Technology lab at Reichman University (formerly IDC), where the experience is taking place.

Suddenly, I hear the deep, short “hoos” of an owl, followed by rustling trees and blowing wind. There’s the sound of rain, deep and soothing, and I actually, literally, feel it in my fingers. I’m asked which side the sound is coming from, and I tell Wald and Amber Maimon, the lab’s academic manager, that I feel like I’m releasing rain from my fingertips.

“The ability to locate sound in space: we’re kind of imitating what happens in the ears,” Wald explains. “It’s like the difference between two points that helps you basically say [whether] it’s here or there. We kind of simulate that through touch, which allows us to put information in space. It’s a very unique feature, that you can actually refer, through vibration and touch, to 3D space. And that’s one thing that has a lot of implications and applications and a lot of things that we can do with that.”

In other words, by feeling the sound, you can tell what direction it is coming from without even having to hear it. As I sat in the middle of the multisensory room, the rain came at me from all sides. Then, suddenly, I felt vibrations in my fingertips from the right, so I immediately knew the sound was coming from that direction.

Wald tells me a story of a student who had been an Air Force pilot and who told him he could relate to the idea of using touch to replace sight and hearing. “He talked about how when you’re being targeted by an aircraft, your hearing and sight are occupied. I told him that that’s exactly why we want to use touch as a sense that can communicate to you that there is a threat now and where it is coming from.”

“We’re also talking about enhancing the touch so that the person using it to hear can better differentiate between different sounds or voices. That’s what they call the cocktail party effect,” he adds. The cocktail party effect, as defined by the American Academy of Audiology, is the ability to focus one’s attention on a particular stimulus while filtering out a range of other stimuli, or noise.

Prof. Amir Amedi, who heads the BCT lab, is considered one of Israel’s top brain scientists and a leader in fields like brain plasticity, multisensory integration, brain imaging, and brain rehabilitation. What I’m experiencing is exactly the kind of work he has pioneered and won numerous awards for: helping blind people “see” through sound and deaf or hearing-impaired people “hear” through their fingers.

A tour with Wald, academic lab manager Amber Maimon, and, later, Amedi himself has given this writer just a taste of the extensive projects happening in the lab. In the multisensory ambisonic room, students and researchers study spatial sound processing in the brain using fMRI, the integration of the senses, and the connection between speech and touch.

Amedi may be best known for EyeMusic, a novel visual-to-auditory sensory-substitution device (SSD) for the blind that provides shape and color information. In essence, EyeMusic is an algorithm that translates an image into sound, Wald explains. A person without sight can “hear” an image as a distinct piece of music, which helps them identify the image by that music.

SSDs are systems that allow the conversion of input from one sense to another (hearing to touch, sight to hearing, etc.).

Amedi and other researchers on the project “were able to get to a state where they could take a camera, place it on a blind person’s head, and someone who was blind from birth would be able to look at a bowl of green apples and pick up the red one, as if they were seeing it with their own eyes,” Wald says.

This year, Prof. Amedi headed a team of researchers from the BCT lab that developed a technology to help people understand speech and sound through touch. (In the future, the technology will also let them detect where a sound is coming from.) The technology is based on the premise that everything people experience in life is multisensory, so the information transmitted to the brain is received in several different areas. If one sense is not working properly, another sense can be trained to compensate for it.

In an experiment, 40 subjects with normal hearing were asked to repeat sentences that had been distorted to simulate hearing through a cochlear implant in a noisy environment. In some cases, vibrations on the fingertips corresponding to the lower speech frequencies were added to the sentences.

For this, the researchers developed a system that converts sound frequencies into vibrations: the same SSD I experienced in the multisensory ambisonic room.

Tech to combat anxiety

Amedi and Maimon have been working for some time on combining the senses with signals from the body to reduce stress and anxiety. Maimon, more specifically, is studying technologies related to ATT, among other techniques, for her postdoctoral research on the link between mental and physical health.

“The whole experience of receiving sounds that move in 3D space and come close or go far is based on a cognitive-based therapy called ATT (attention training technique). And it has been shown in the literature to reduce stress and anxiety,” Amedi tells NoCamels.

“So relaxing sounds can help a little bit to reduce stress, but it’s very varied; it’s not a huge effect. ATT allows you to control your attention to the outside world and the internal world. If you think about it, anxiety is an inability to control your attention, to control where you are putting your mind or your consciousness. It’s something much deeper that comes from psychological practices. The problem is that you have to go to a therapist, and he would play different sounds and also use environmental sounds, like a car moving or a bird outside. This is the first effort to optimize this and create the experience. It’s part of a bigger project we’re doing with the Shaarei Tzedek Cancer Center, and we also want to develop new methods to reduce claustrophobia,” says Amedi.

Amedi has partnered with Dr. Ben Corn, deputy director of the Shaarei Tzedek Medical Center in Jerusalem and chairman of its Department of Radiation Oncology, to create multisensory devices for the waiting, treatment, and faculty areas of a cancer center at Shaarei Tzedek set to open this summer. Inventions being developed for the center include playful 3D glasses that “can enable you to feel that you’re in a completely different space and not going through radiotherapy,” says Amedi, and breathing sensors that help patients relax by guiding breathing exercises with soothing visual, sound, and tactile cues.

“Then we thought it could be really cool to try and combine the speech-to-touch project, in which we try to help people who have a hearing impairment improve their speech hearing,” Amedi adds. “And this is basically how we created the first system that enables you to understand 3D space using touch. So you can know not only the content but also the location. And this is very important for a lot of things. For example, for people with hearing problems or with a cochlear implant, the main problem is that they cannot understand speech when there are several sources of sound or noise.” These in-ear recordings will combine ways to help different people hear the same sounds coming from different parts of the room.

Brain scanning

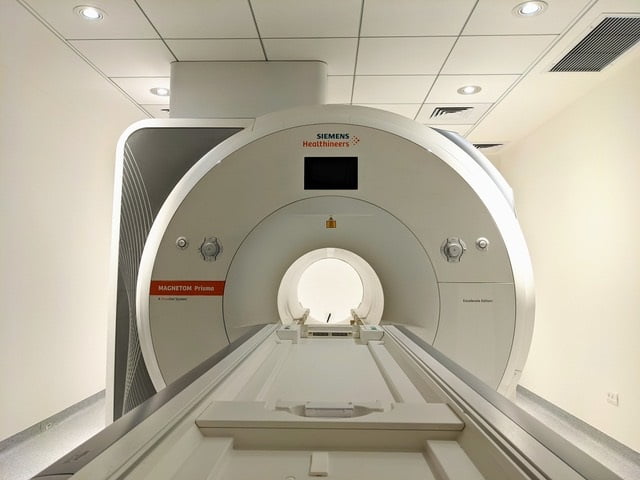

The new Ruth and Meir Rosental Brain Imaging Center, an MRI unit in the basement of the BCT lab, was one place we didn’t get a chance to visit on the tour.

Maimon says, “It’s starting to run now. We’re starting to collaborate with researchers here and outside of the university.”

“There are only a few research units like this in the country. We only have it for research; we don’t use it [for actual patient tests]. But since there aren’t so many of them, it’s good to know we can run studies in-house instead of having to go elsewhere,” Wald adds. “In this room, we also have a lot of the tools that we develop, and we can take some of the experiences and design tools we developed and export them to different places, be it a wearable, things people can use in their homes or in other specific environments, or the MRI. So we can study what happens in people’s brains when they experience the things that we do.”

“The fact that it’s associated with a specific lab, I don’t think there’s anything like that in Israel,” he adds.

Last year, Amedi led a team that helped train a 50-year-old blind man to “see” by ear and found that neural circuits in his brain formed so-called topographic maps, a type of brain organization previously thought to emerge only in infancy.

And this is just one of dozens of projects happening at the Baruch Ivcher Institute for Brain, Cognition, and Technology at Reichman University.

“There’s a very famous expression by [US neuroscientist] Paul Bach-y-Rita, who has done lots of work on sensory substitution devices. He says, ‘We see with the brain, not the eyes.’ That generally sums up what we do here,” says Maimon.

Source: Touch To Hear? Sound To See? This Israeli Lab Is Reprogramming The Senses